To accelerate fact checking and online misinformation detection, one approach is to exploit crowdsourcing by analyzing the characteristics of people’s reactions to a potentially false claim. Take, for example, a false claim posted on Twitter about an alleged shooting in Ottawa. Some users expressed surprise or disagreement and asked for clarification in their replies, while others believed the claim and retweeted it as if it were true. One of our WWW ’19 papers develops a stance classification model that determines the position a piece of evidence takes toward a claim [1]. On social media platforms, people’s replies to a claim can themselves serve as evidence; exploiting them is the focus of our second WWW ’19 paper [2].


From Stances’ Imbalance to their Hierarchical Representation and Detection

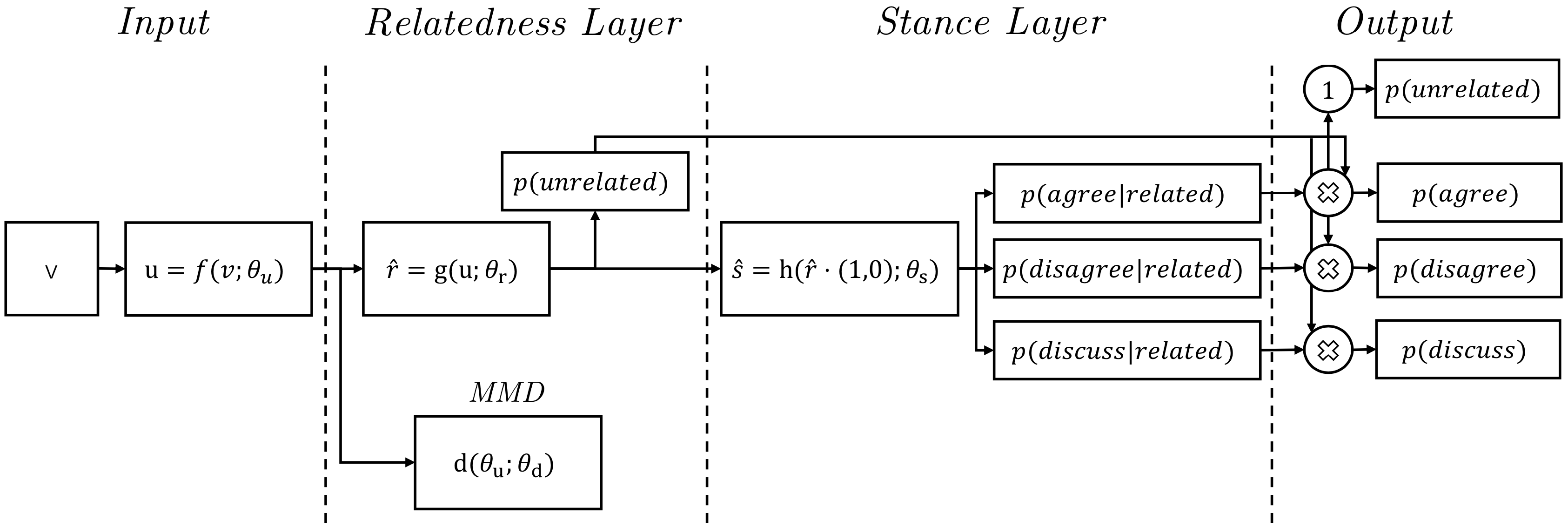

Generally, stances can be categorized into three classes: agree, disagree, and discuss (neutral). A fourth class, unrelated, is added to handle noise from irrelevant posts, e.g., advertisements. Previous methods treat the task as a flat multiclass classification problem, neglecting the hierarchical structure of the stance classes. The commonly used four-way classifiers are also easily skewed by class imbalance. We address both issues by modeling stance detection with a two-layer neural network.

The first layer identifies whether a piece of evidence is related to the claim, while the second layer classifies the evidence identified as related into the remaining three classes: agree, disagree, and discuss. Moreover, we study three levels of dependence between the two layers:

- independent, when there is no error propagation between the two layers;

- dependent, when errors propagate freely between the two layers; and

- learned, when the error propagation is controlled by Maximum Mean Discrepancy (MMD).
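To make the hierarchy concrete, here is a minimal NumPy sketch of how the two layers' outputs could be combined at inference time. It assumes that the first layer emits a probability that the evidence is related and that the second layer emits a softmax over agree/disagree/discuss conditioned on relatedness. The function names and the 0.5 threshold are hypothetical, and the sketch covers neither training nor the dependence between layers; it is not the paper's implementation.

```python
import numpy as np

STANCES = ["unrelated", "agree", "disagree", "discuss"]


def combine_layers(p_related: np.ndarray, p_stance_given_related: np.ndarray) -> np.ndarray:
    """Combine the two layers' outputs into one four-way distribution.

    p_related: shape (n,), hypothetical first-layer probability that each
        piece of evidence is related to the claim.
    p_stance_given_related: shape (n, 3), hypothetical second-layer
        distribution over (agree, disagree, discuss) given relatedness.
    Returns shape (n, 4), columns ordered as in STANCES.
    """
    p_related = p_related[:, None]                    # (n, 1) for broadcasting
    unrelated = 1.0 - p_related                       # P(unrelated)
    related = p_related * p_stance_given_related      # P(related) * P(stance | related)
    return np.concatenate([unrelated, related], axis=1)


def predict(p_related: np.ndarray, p_stance_given_related: np.ndarray,
            threshold: float = 0.5) -> list:
    """Hard decision: decide relatedness first, then pick a stance."""
    labels = []
    for r, q in zip(p_related, p_stance_given_related):
        if r < threshold:
            labels.append("unrelated")
        else:
            labels.append(STANCES[1 + int(np.argmax(q))])
    return labels


p_rel = np.array([0.1, 0.9])
p_st = np.array([[0.3, 0.3, 0.4], [0.7, 0.1, 0.2]])
print(combine_layers(p_rel, p_st))   # each row sums to 1
print(predict(p_rel, p_st))          # ['unrelated', 'agree']
```

Because relatedness is decided by its own layer, the three related stances never compete directly with the large unrelated class, which is the imbalance problem the hierarchy is meant to ease.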

We show that when the dependence is learned, the network (a) better separates the distributions of related and unrelated stances and (b) outperforms state-of-the-art methods in stance detection accuracy.
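Maximum Mean Discrepancy measures how far apart two distributions are from samples alone. The sketch below is the standard biased estimator of squared MMD with a Gaussian kernel, $\mathrm{MMD}^2 = \overline{k(x,x')} + \overline{k(y,y')} - 2\,\overline{k(x,y)}$. We assume, for illustration only, that the two samples are hidden representations of related and unrelated evidence; the bandwidth, the random stand-in vectors, and how the term would enter a training loss are our assumptions, not details from the paper.

```python
import numpy as np


def gaussian_kernel(a: np.ndarray, b: np.ndarray, sigma: float = 1.0) -> np.ndarray:
    """Pairwise RBF kernel k(a_i, b_j) = exp(-||a_i - b_j||^2 / (2 sigma^2))."""
    sq_dists = (
        np.sum(a**2, axis=1)[:, None]
        + np.sum(b**2, axis=1)[None, :]
        - 2.0 * a @ b.T
    )
    # Clamp tiny negative values caused by floating-point cancellation.
    return np.exp(-np.maximum(sq_dists, 0.0) / (2.0 * sigma**2))


def mmd2(x: np.ndarray, y: np.ndarray, sigma: float = 1.0) -> float:
    """Biased estimate of squared MMD between samples x (n, d) and y (m, d)."""
    k_xx = gaussian_kernel(x, x, sigma).mean()
    k_yy = gaussian_kernel(y, y, sigma).mean()
    k_xy = gaussian_kernel(x, y, sigma).mean()
    return float(k_xx + k_yy - 2.0 * k_xy)


rng = np.random.default_rng(0)
related = rng.normal(0.0, 1.0, size=(200, 8))     # stand-in hidden states
unrelated = rng.normal(2.0, 1.0, size=(200, 8))   # shifted distribution
# Samples from the same distribution give a much smaller MMD than shifted ones.
print(mmd2(related, related[:100], sigma=4.0) < mmd2(related, unrelated, sigma=4.0))  # True
```

A larger MMD between the two groups corresponds to the better-separated related and unrelated distributions described above.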

Reply-aided Detection of Misinformation via Bayesian Deep Learning

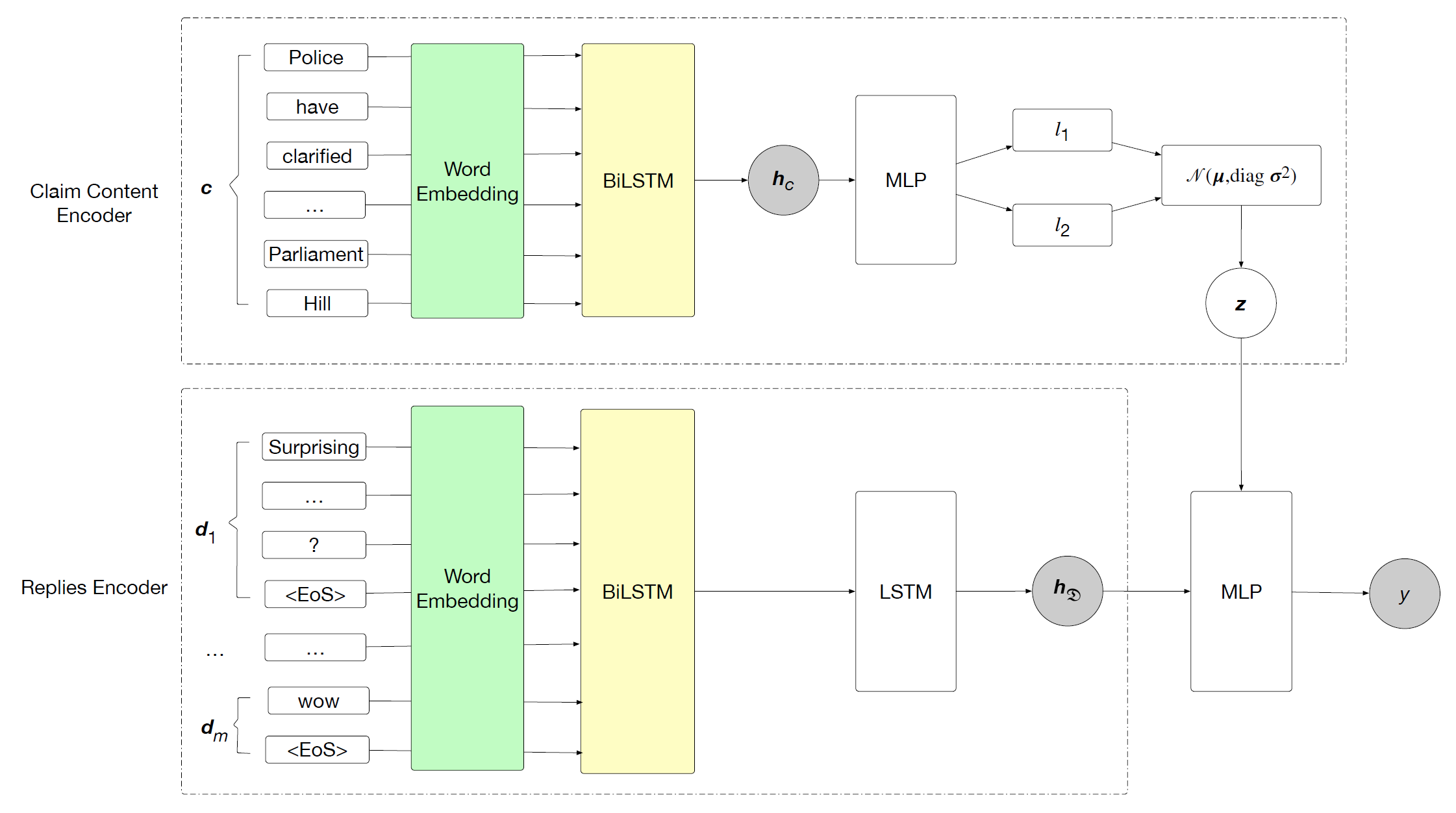

On social media platforms, people’s replies to a claim reflect their reactions and comments, and can therefore serve as potential evidence. Most existing models use feature engineering or deep learning to extract features from a claim’s content and from auxiliary information such as replies. However, these models learn deterministic mappings to capture the difference between true and false claims. A major limitation is that they cannot represent the uncertainty caused by limited or incomplete data about the claim being examined.

In the figure above, blocks and nodes represent computation modules and variables, respectively. Grey nodes are observed variables, while blank (unshaded) nodes are latent variables. Blocks of the same color denote the same module.

We address this problem by proposing a Bayesian deep learning model that incorporates stochastic factors to capture complex relationships between the latent distribution and the observed variables. The model uses both the claim content and the content of its replies. Because the posterior distribution is analytically intractable, we train the model with a Stochastic Gradient Variational Bayes (SGVB) algorithm. We derive a tractable Evidence Lower BOund (ELBO) objective for the model, which is used to approximate the intractable posterior. The model is then optimized by following the gradient direction that maximizes the ELBO.
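As a rough illustration of SGVB, the sketch below estimates $\mathrm{ELBO} = \mathbb{E}_{q(z)}[\log p(y \mid z)] - \mathrm{KL}(q(z)\,\|\,p(z))$ with the reparameterization trick $z = \mu + \sigma \odot \epsilon$, $\epsilon \sim \mathcal{N}(0, I)$. Everything model-specific here is a hypothetical stand-in, not the paper's architecture: a diagonal Gaussian $q(z)$ plays the role of the encoder output (imagined as coming from claim and reply encoders), the prior is $\mathcal{N}(0, I)$, and the label $y$ (e.g., 1 for a false claim) is decoded by a logistic classifier with weights `w` and bias `b`.

```python
import numpy as np


def kl_diag_gaussian_to_standard(mu: np.ndarray, log_var: np.ndarray) -> float:
    """Closed-form KL( N(mu, diag(exp(log_var))) || N(0, I) )."""
    return float(0.5 * np.sum(mu**2 + np.exp(log_var) - 1.0 - log_var))


def bernoulli_log_likelihood(y: int, logit: np.ndarray) -> np.ndarray:
    """Stable log p(y | logit) for y in {0, 1}.

    log sigmoid(l) = -log(1 + exp(-l)); log(1 - sigmoid(l)) = -log(1 + exp(l)).
    """
    return -(y * np.logaddexp(0.0, -logit) + (1 - y) * np.logaddexp(0.0, logit))


def elbo_estimate(y, mu, log_var, w, b, n_samples=16, rng=None) -> float:
    """Monte Carlo ELBO estimate using the reparameterization trick.

    mu, log_var: parameters of the hypothetical variational posterior q(z).
    w, b: parameters of a hypothetical logistic decoder p(y | z).
    """
    rng = np.random.default_rng() if rng is None else rng
    std = np.exp(0.5 * log_var)
    eps = rng.standard_normal((n_samples, mu.shape[0]))  # eps ~ N(0, I)
    z = mu + std * eps                                   # reparameterized samples of z
    expected_ll = np.mean(bernoulli_log_likelihood(y, z @ w + b))
    return float(expected_ll - kl_diag_gaussian_to_standard(mu, log_var))


rng = np.random.default_rng(0)
mu, log_var = np.zeros(4), np.zeros(4)   # q equals the prior, so the KL term is 0
w, b = np.ones(4), 0.0
print(elbo_estimate(1, mu, log_var, w, b, rng=rng))
```

Because the noise `eps` is sampled independently of `mu` and `log_var`, the estimate is differentiable in all parameters; in practice an autodiff framework would compute those gradients and take ascent steps on the ELBO. The optimizer is omitted here.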

Zhang Qiang

References

[1] From Stances’ Imbalance to their Hierarchical Representation and Detection. Qiang Zhang, Shangsong Liang, Aldo Lipani, Zhaochun Ren and Emine Yilmaz. WWW ’19.

[2] Reply-aided Detection of Misinformation via Bayesian Deep Learning. Qiang Zhang, Aldo Lipani, Shangsong Liang and Emine Yilmaz. WWW ’19.